The EBU ADM Renderer (EAR) is a complete interpretation of the Audio Definition Model (ADM) format, specified in Recommendation ITU-R BS.2076-1. ADM is the recommended format for all stages and use cases within the scope of programme productions of Next Generation Audio (NGA). This repository contains a Python reference implementation of the EBU ADM Renderer.

This Renderer implementation is capable of rendering audio signals to all reproduction systems mentioned in “Advanced sound system for programme production (ITU-R BS.2051-1)”.

Further descriptions of the EAR algorithms and functionalities can be found in EBU Tech 3388.

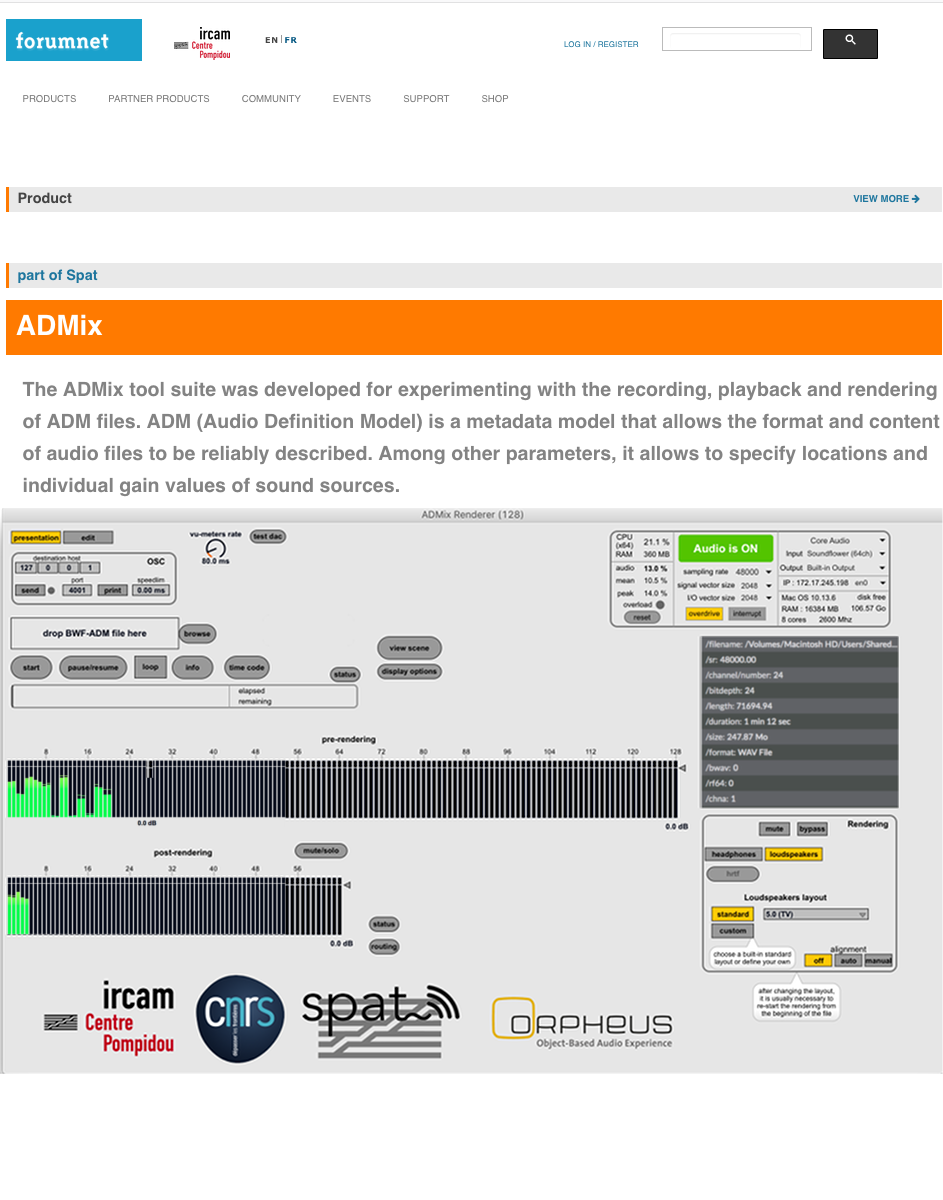

The introduction of HTML5 and the Web Audio API established an important prerequisite for native rendering of object-based audio in modern browsers. Object-based audio is an approach for creating and deploying interactive, personalised, scalable and immersive content, by representing it as a set of individual assets together with metadata describing their relationships and associations. This allows media objects to be assembled in new ways to create new user experiences.

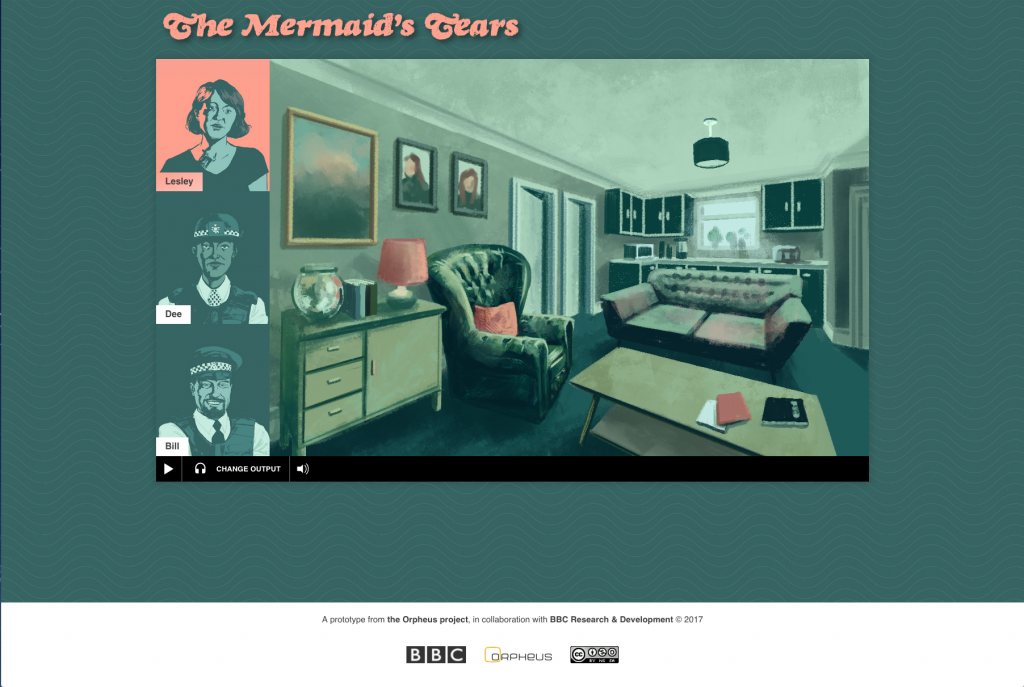

BBC R&D created this programme using the tools and protocols we developed as part of ORPHEUS, to see whether we could successfully use them as part of a real production. To make the most of the possibilities offered by this new technology, we commissioned a writer to create a radio play especially for the project. We then produced the drama by working in collaboration with BBC Radio Drama London. This production was on the BBC TASTER platform from September to December 2017.

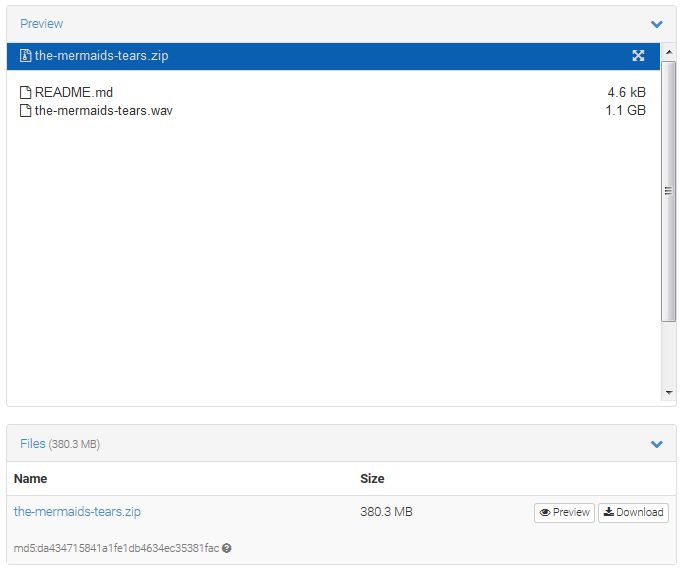

This file is an object-based audio recording of “The Mermaid’s Tears”, an interactive and immersive radio drama produced by the BBC as part of the ORPHEUS project. In the drama, you can choose to follow one of three characters (Lesley, Dee or Bill), each of whom has a different audio mix. This is a BW64 file consisting of 15 audio tracks and a chunk of descriptive ADM metadata. The metadata describes the 43 audio objects that make up the drama and three different ways to mix those objects, one for each of the characters.

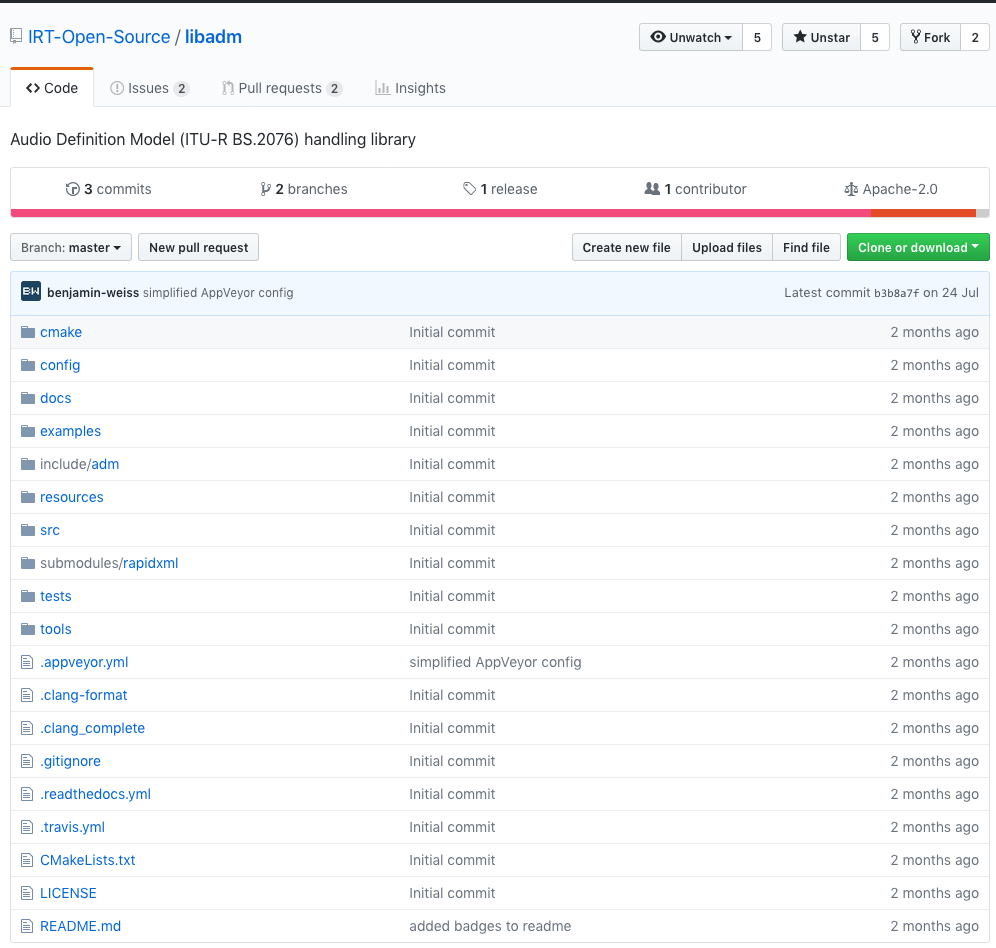

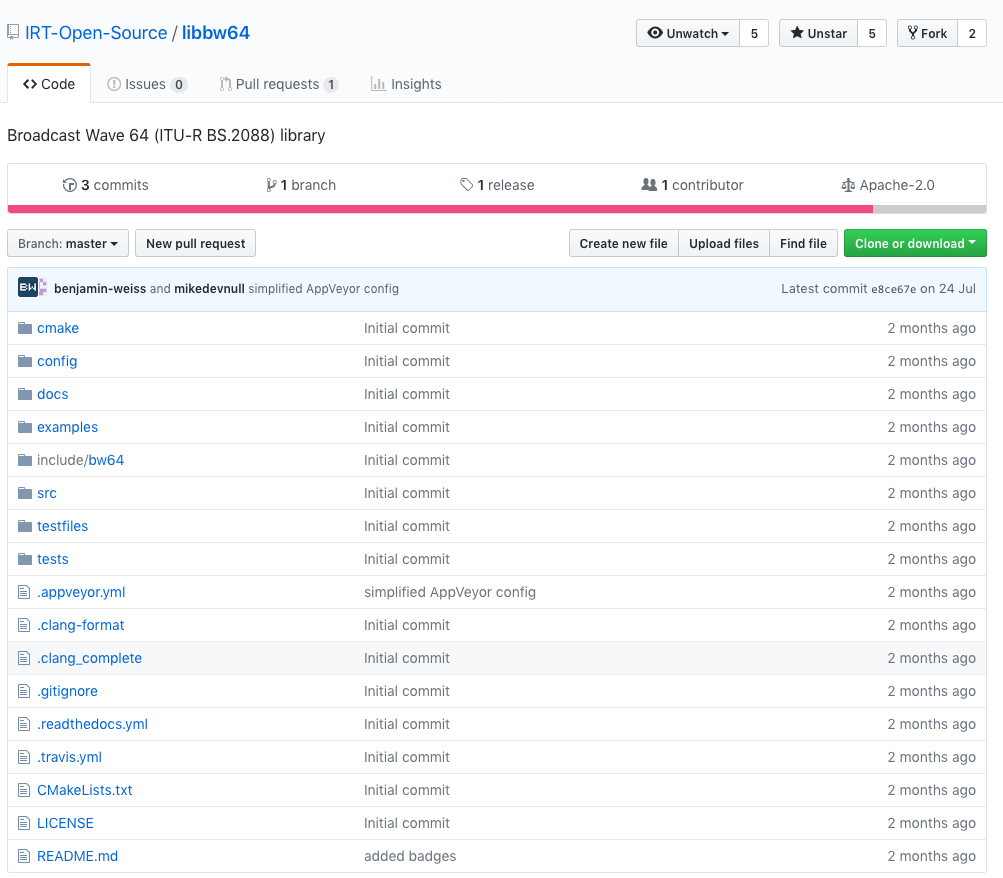

The libadm library is a modern C++11 library to parse, modify, create and write XML documents that conform to ITU-R BS.2076-1. It works well with the header-only library libbw64 for writing ADM-related applications with minimal dependencies.

The libbw64 library is a lightweight, header-only C++ library to read and write BW64 files. BW64 is standardised as Recommendation ITU-R BS.2088 and is the successor of RF64, so it already contains the extensions needed to support files larger than 4 GB. In addition, a BW64 file can contain ADM metadata and link it with the audio tracks in the file. To do that, BW64 specifies two new RIFF chunks: the axml chunk and the chna chunk. To parse, create, modify and write the ADM metadata in the axml chunk, you may use the libadm library.
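As a rough illustration of the chunk layout described above, the following Python sketch walks the top-level RIFF chunks of a small in-memory file and pulls out the axml payload. It is not libbw64 and does not reflect its API: the function name `find_chunks`, the helper `_chunk` and the demo file contents are all hypothetical. It also assumes a file small enough that the 32-bit chunk sizes are valid, so it does not consult the ds64 chunk that files larger than 4 GB rely on.

```python
import io
import struct


def find_chunks(stream):
    """Return {chunk_id: (payload_offset, payload_size)} for top-level chunks.

    Hypothetical helper: assumes 32-bit chunk sizes are valid (no ds64 lookup).
    """
    magic, _size, form = struct.unpack("<4sI4s", stream.read(12))
    if magic not in (b"RIFF", b"BW64", b"RF64") or form != b"WAVE":
        raise ValueError("not a RIFF/WAVE-style file")
    chunks = {}
    while True:
        header = stream.read(8)
        if len(header) < 8:
            break  # end of file
        chunk_id, size = struct.unpack("<4sI", header)
        chunks[chunk_id] = (stream.tell(), size)
        # RIFF chunks are word-aligned: odd-sized payloads carry one pad byte.
        stream.seek(size + (size & 1), io.SEEK_CUR)
    return chunks


def _chunk(chunk_id, payload):
    """Serialise one chunk for the demo file (hypothetical helper)."""
    pad = b"\x00" if len(payload) % 2 else b""
    return chunk_id + struct.pack("<I", len(payload)) + payload + pad


# Build a tiny stand-in file; the payloads are placeholders, not real audio/ADM.
body = (
    b"WAVE"
    + _chunk(b"JUNK", b"\x00" * 3)        # odd size, exercises the pad byte
    + _chunk(b"fmt ", b"\x00" * 16)
    + _chunk(b"chna", b"\x00" * 4)
    + _chunk(b"axml", b"<ebuCoreMain/>")
    + _chunk(b"data", b"\x00" * 8)
)
demo = io.BytesIO(b"RIFF" + struct.pack("<I", len(body)) + body)

chunks = find_chunks(demo)
offset, size = chunks[b"axml"]
demo.seek(offset)
axml_text = demo.read(size).decode("utf-8")
print(sorted(chunks), axml_text)
```

The sketch records offsets rather than reading every payload, so the audio samples in the data chunk are never loaded just to reach the metadata. For real files, libbw64 and libadm handle the cases this sketch leaves out.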

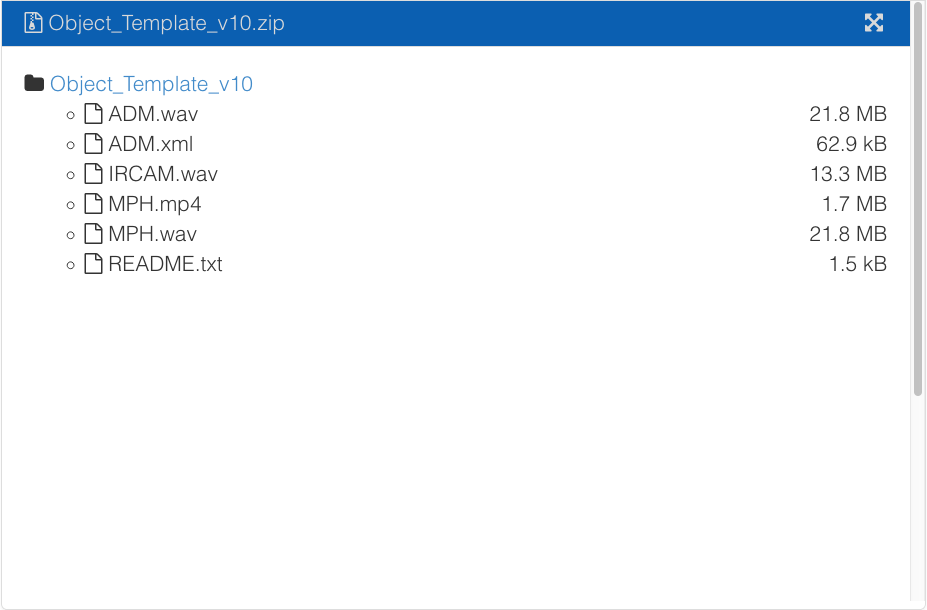

The ADM file “ADM.xml” can be used as a template for authoring ADM content that is to be encoded into MPEG-H. Conversion into MPEG-H has been tested and should work for content that follows the given structure and constraints.

This particular ADM template is for audio scenes that contain up to 15 dynamic objects (without any other formats).

Audio Content:

The content includes two objects: a speaker reading a poem, moving from left to right while his volume is reduced, and a “humming bee” moving in the opposite direction while its volume increases.

Position and gain are controlled through dynamic object metadata.
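To make the idea of dynamic object metadata more concrete, here is a minimal Python sketch that reads position and gain from a sequence of ADM `audioBlockFormat` elements. The XML fragment is invented for illustration and is not taken from ADM.xml: the IDs, times, azimuths and gains are assumptions, and the fragment omits the XML namespace that a real ADM document may declare. The function name `block_summary` is likewise hypothetical.

```python
import xml.etree.ElementTree as ET

# Invented fragment: one object moving from left (+30 degrees azimuth) to
# right (-30 degrees) while its gain is halved. All values are assumptions.
FRAGMENT = """
<audioChannelFormat audioChannelFormatID="AC_00031001"
                    audioChannelFormatName="speaker"
                    typeLabel="0003" typeDefinition="Objects">
  <audioBlockFormat audioBlockFormatID="AB_00031001_00000001"
                    rtime="00:00:00.00000" duration="00:00:05.00000">
    <position coordinate="azimuth">30.0</position>
    <position coordinate="elevation">0.0</position>
    <position coordinate="distance">1.0</position>
    <gain>1.0</gain>
  </audioBlockFormat>
  <audioBlockFormat audioBlockFormatID="AB_00031001_00000002"
                    rtime="00:00:05.00000" duration="00:00:05.00000">
    <position coordinate="azimuth">-30.0</position>
    <position coordinate="elevation">0.0</position>
    <position coordinate="distance">1.0</position>
    <gain>0.5</gain>
  </audioBlockFormat>
</audioChannelFormat>
"""


def block_summary(xml_text):
    """Return (rtime, azimuth, gain) for each audioBlockFormat in the text."""
    root = ET.fromstring(xml_text.strip())
    rows = []
    for block in root.iter("audioBlockFormat"):
        coords = {
            p.get("coordinate"): float(p.text)
            for p in block.findall("position")
        }
        # A block without a gain element is treated as unity gain here.
        gain = float(block.findtext("gain", default="1.0"))
        rows.append((block.get("rtime"), coords.get("azimuth"), gain))
    return rows


for rtime, azimuth, gain in block_summary(FRAGMENT):
    print(rtime, azimuth, gain)
```

Each block carries its own start time, position and gain, which is how a single audio track can describe an object that moves and changes level over time.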

Files included:

1. ADM.wav: 2-channel WAV file with <chna> and <axml> chunks

2. ADM.xml: The ADM file as included in the <axml> chunk of (1), provided separately for convenience

3. MPH.mp4: The MP4 file after conversion and encoding to MPEG-H 3D Audio

4. MPH.wav: The decoded MP4-file (3)

5. IRCAM.wav: The ADM-file (1) rendered with the IRCAM-Renderer (0.3.4).

Note: As the IRCAM-Renderer did not provide file output, this required manual capture, truncation and normalization.

EBU Technical Report 042 provides a concrete and practical example of an object-based audio architecture and workflow, from production to broadcast and broadband delivery. This report is based on the ORPHEUS deliverable D2.4.